These days I do minimal networking, but my college diploma is in an IP Engineering discipline, and before that, I had the opportunity to complete the Cisco Networking Academy curriculum in high school. All of that to say that, while I haven’t pursued that field, I have a decent foundation and understanding of how those things operate. At work, I have a hand in firewalls, switches, DNS, DHCP, routing, subnetting and other wonderful things, but it’s not super advanced work.

For about 8 months now, we’ve had a dedicated link set up between our main office and a remote site. It is meant to replace the internet-based VPN tunnel that currently carries that traffic, but it still isn’t in use because of problems. More specifically, whenever I switch traffic to route over the dedicated link, service becomes extremely unreliable. Ping to any system works, but protocols like RDP, SMB and replication (AD and Exchange) work intermittently at best (RDP) or not at all (SMB, replication). I could RDP to ServerA but not to ServerB, even though they’re both VMs hosted on the same Hyper-V server at the remote site. And while RDP worked to the DC at the remote site and I could ping all the other DCs, repadmin /replsummary showed that replication was no longer working.

Here’s a simple topology diagram in ASCII art:

Head Office core switch - Head Office firewall - VPN Tunnel over internet - SiteA firewall - SiteA core switch

vs.

Head Office core switch - dedicated L2 link - SiteA core switch

I knew this was going to be a pain to troubleshoot, especially since I couldn’t take the whole DR site offline during the day whenever I wanted to run tests. I quintuple-checked the configuration and didn’t find any errors. I also compared it line by line against another site where we have the same setup (a dedicated link between core switches), and everything was configured correctly.

I finally decided to bite the bullet and spent a couple of late nights gathering information so that I could pin down the precise problem. The physical layer was out of my control, the data link layer seemed fine (also out of my control… mostly), and ping worked (layer 3), so I was pretty confident the issue originated at the network layer or higher.

I started by looking at packet sizes. I knew that a default ping is a tiny packet (32 bytes of data on Windows), while things like replication and SMB would send full-size packets. I ran some tests with ping -f -l (-f sets the Don’t Fragment flag, -l sets the payload size) to determine the maximum packet size that could successfully transit the link.

| No. | Time | Source | Destination | Protocol | Length | ICMP Length | Info |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | 0.000000 | 10.1.0.51 | 192.168.4.3 | ICMP | 1507 | 1465 | Echo (ping) request id=0x006b, seq=37568/49298, ttl=128 (reply in 2) |
| 2 | 0.005320 | 192.168.4.3 | 10.1.0.51 | ICMP | 1507 | 1465 | Echo (ping) reply id=0x006b, seq=37568/49298, ttl=126 (request in 1) |
| 3 | 1.005759 | 10.1.0.51 | 192.168.4.3 | ICMP | 1507 | 1465 | Echo (ping) request id=0x006b, seq=37569/49554, ttl=128 (reply in 4) |
| 4 | 1.011053 | 192.168.4.3 | 10.1.0.51 | ICMP | 1507 | 1465 | Echo (ping) reply id=0x006b, seq=37569/49554, ttl=126 (request in 3) |
| 5 | 2.011301 | 10.1.0.51 | 192.168.4.3 | ICMP | 1507 | 1465 | Echo (ping) request id=0x006b, seq=37570/49810, ttl=128 (reply in 6) |
| 6 | 2.016662 | 192.168.4.3 | 10.1.0.51 | ICMP | 1507 | 1465 | Echo (ping) reply id=0x006b, seq=37570/49810, ttl=126 (request in 5) |
| 7 | 3.017966 | 10.1.0.51 | 192.168.4.3 | ICMP | 1507 | 1465 | Echo (ping) request id=0x006b, seq=37571/50066, ttl=128 (reply in 8) |
| 8 | 3.023353 | 192.168.4.3 | 10.1.0.51 | ICMP | 1507 | 1465 | Echo (ping) reply id=0x006b, seq=37571/50066, ttl=126 (request in 7) |
| 9 | 16.070931 | 10.1.0.51 | 192.168.4.3 | ICMP | 1510 | 1468 | Echo (ping) request id=0x006b, seq=37576/51346, ttl=128 (reply in 10) |
| 10 | 16.076166 | 192.168.4.3 | 10.1.0.51 | ICMP | 1510 | 1468 | Echo (ping) reply id=0x006b, seq=37576/51346, ttl=126 (request in 9) |
| 11 | 17.075444 | 10.1.0.51 | 192.168.4.3 | ICMP | 1510 | 1468 | Echo (ping) request id=0x006b, seq=37577/51602, ttl=128 (reply in 12) |
| 12 | 17.080656 | 192.168.4.3 | 10.1.0.51 | ICMP | 1510 | 1468 | Echo (ping) reply id=0x006b, seq=37577/51602, ttl=126 (request in 11) |
| 13 | 18.082161 | 10.1.0.51 | 192.168.4.3 | ICMP | 1510 | 1468 | Echo (ping) request id=0x006b, seq=37578/51858, ttl=128 (reply in 14) |
| 14 | 18.087498 | 192.168.4.3 | 10.1.0.51 | ICMP | 1510 | 1468 | Echo (ping) reply id=0x006b, seq=37578/51858, ttl=126 (request in 13) |
| 15 | 19.087735 | 10.1.0.51 | 192.168.4.3 | ICMP | 1510 | 1468 | Echo (ping) request id=0x006b, seq=37579/52114, ttl=128 (reply in 16) |
| 16 | 19.093044 | 192.168.4.3 | 10.1.0.51 | ICMP | 1510 | 1468 | Echo (ping) reply id=0x006b, seq=37579/52114, ttl=126 (request in 15) |
| 17 | 26.901887 | 10.1.0.51 | 192.168.4.3 | ICMP | 1511 | 1469 | Echo (ping) request id=0x006b, seq=37580/52370, ttl=128 (no response found!) |
| 18 | 31.753228 | 10.1.0.51 | 192.168.4.3 | ICMP | 1511 | 1469 | Echo (ping) request id=0x006b, seq=37581/52626, ttl=128 (no response found!) |
| 19 | 36.753276 | 10.1.0.51 | 192.168.4.3 | ICMP | 1511 | 1469 | Echo (ping) request id=0x006b, seq=37582/52882, ttl=128 (no response found!) |
| 20 | 41.753263 | 10.1.0.51 | 192.168.4.3 | ICMP | 1511 | 1469 | Echo (ping) request id=0x006b, seq=37583/53138, ttl=128 (no response found!) |

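I found that with a payload of 1468 bytes or less, the ping succeeded. At 1469 to 1472 bytes, there was no response. And at 1473 bytes or more, ping helpfully told me "Packet needs to be fragmented but DF set." That last limit is the local one: 1472 bytes of data plus 28 bytes of ICMP and IP headers is exactly the 1500-byte MTU of the sending interface. (Wireshark’s Length column is 42 bytes larger than the ICMP data because it also counts the 8-byte ICMP, 20-byte IP and 14-byte Ethernet headers.)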
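The three behaviours, and the Length column in the capture, fall out of simple header arithmetic. Below is a minimal Python model, assuming a 1500-byte local MTU and a 1496-byte path MTU (the value these results point to); the helper names are hypothetical, and a real test would replace the simulated probe with actual `ping -f -l <size> <host>` runs. Sizes between the two limits leave the host but are dropped without any ICMP error, which is what an L2 device discarding oversized frames would look like. The sketch also shows how a binary search finds the limit in about 11 probes instead of stepping through sizes one at a time.

```python
from typing import Callable

IPV4_HEADER = 20      # bytes, assuming no IP options
ICMP_HEADER = 8       # bytes, echo request/reply header
ETHERNET_HEADER = 14  # dst MAC + src MAC + EtherType (Wireshark's Length excludes the FCS)


def frame_length(payload: int) -> int:
    """Frame length Wireshark reports for an untagged ICMP echo with `payload` data bytes."""
    return payload + ICMP_HEADER + IPV4_HEADER + ETHERNET_HEADER


def df_ping_outcome(payload: int, local_mtu: int, path_mtu: int) -> str:
    """Model what `ping -f -l payload` reports for a given local MTU and path MTU."""
    ip_packet = payload + ICMP_HEADER + IPV4_HEADER
    if ip_packet > local_mtu:
        return "needs fragmentation"  # the sending host refuses to send it at all
    if ip_packet > path_mtu:
        return "no response"  # sent, but dropped silently somewhere along the path
    return "reply"


def largest_df_payload(probe: Callable[[int], bool], low: int = 0, high: int = 1472) -> int:
    """Binary-search the largest payload for which probe(size) succeeds.

    Assumes probe(low) succeeds and that success is monotonic in size.
    """
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if probe(mid):
            low = mid       # mid works, so the answer is mid or larger
        else:
            high = mid - 1  # mid failed, so the answer is smaller
    return low


if __name__ == "__main__":
    # Simulated probe with hypothetical values: 1500-byte local MTU, 1496-byte path MTU.
    def probe(size: int) -> bool:
        return df_ping_outcome(size, 1500, 1496) == "reply"

    best = largest_df_payload(probe)
    print(best, best + ICMP_HEADER + IPV4_HEADER)                       # 1468 1496
    print(frame_length(1465), frame_length(1468), frame_length(1469))  # 1507 1510 1511
```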

Okay! We’re making progress! …Maybe. Still working on the MTU theory, I took a look at all the network devices that these packets would transit:

- ServerA
- Hyper-V host server
- Remote site core switch
- L2 dedicated link (beyond my control…)
- Head Office core switch

Both switches were set to an MTU of 1500 bytes, which is pretty standard. The Hyper-V host server used the Windows default of 1500, as did ServerA. So it looked like the MTUs hadn’t been altered in any meaningful way.

I ran a series of packet captures to see if anything looked unusual. Nothing jumped out at me, but I did notice that whenever the packet size exceeded roughly 1500 bytes, there were lots of retransmissions and/or errors.

Wait! How is it that a server with an MTU of 1500 is sending packets that are 2600 bytes?

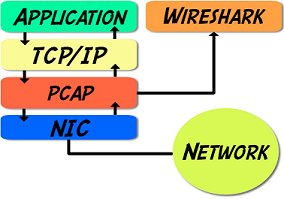

Wireshark uses WinPcap (or libpcap) to grab the data before it’s handed to the NIC:

Many operating systems and NIC drivers support TCP Segmentation Offload / Large Send Offload / Generic Segmentation Offload, which offloads the task of breaking TCP data up into MSS-sized pieces. This is typically handled by the NIC, which saves CPU overhead and improves performance. However… with offloading enabled, segmentation happens after Wireshark has grabbed the data, so it isn’t visible when capturing on the host itself. Capturing from a SPAN port (port mirroring) or a TAP doesn’t have this limitation.
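To make the offload behaviour concrete, here is a minimal Python sketch of the splitting a NIC performs under TSO/LSO: one large send handed down by the OS becomes several MSS-sized segments on the wire. It assumes IPv4 and TCP headers without options (20 bytes each, so MSS = MTU - 40) and ignores the header copying and checksum work a real NIC also does; `tso_segments` is a hypothetical helper name.

```python
from typing import List


def tso_segments(payload_len: int, mtu: int = 1500) -> List[int]:
    """Return the TCP payload size of each segment a TSO-capable NIC would emit.

    Assumes no IP or TCP options, so MSS = MTU - 20 (IP) - 20 (TCP).
    """
    mss = mtu - 40
    segments: List[int] = []
    remaining = payload_len
    while remaining > 0:
        chunk = min(mss, remaining)  # every segment is full-sized except possibly the last
        segments.append(chunk)
        remaining -= chunk
    return segments


if __name__ == "__main__":
    print(tso_segments(2600))            # [1460, 1140]
    print(tso_segments(2600, mtu=1496))  # [1456, 1144]
```

The 2600 figure is just the rough size seen in the capture, used here as a payload for illustration; the point is that the oversized "packet" Wireshark shows never actually hits the wire in that form.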

Back to my regularly scheduled commentary…

Running with the MTU theory, I decided to set the OS MTU to 1496 bytes. That’s 1468, the largest payload that worked with the Don’t Fragment flag set, plus 28 bytes of headers (a 20-byte IP header and an 8-byte ICMP header). First, let’s double-check what the MTU is set to on my Windows Server 2019 DC at SiteA:

netsh int ipv4 show sub

(the full command is netsh interface ipv4 show subinterfaces, but I generally use the shorthand).

C:\Users>netsh int ipv4 show sub

| MTU | MediaSenseState | Bytes In | Bytes Out | Interface |
| --- | --- | --- | --- | --- |
| 4294967295 | 1 | 0 | 52364 | Loopback Pseudo-Interface 1 |
| 1500 | 1 | 550194231 | 647147510 | Ethernet 2 |

Let’s update this:

netsh int ipv4 set sub "Ethernet 2" mtu=1496 store=persistent

(netsh interface ipv4 set subinterface "Ethernet 2" mtu=1496 store=persistent)

C:\Users>netsh int ipv4 show sub

| MTU | MediaSenseState | Bytes In | Bytes Out | Interface |
| --- | --- | --- | --- | --- |
| 4294967295 | 1 | 0 | 52364 | Loopback Pseudo-Interface 1 |
| 1496 | 1 | 55025789 | 647165843 | Ethernet 2 |
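If you need to check this across several servers, the netsh output is easy to parse. A minimal Python sketch, assuming the column layout shown above (MTU first, interface name last, and names that may contain spaces); `parse_subinterfaces` and `read_mtus` are hypothetical helpers, and `read_mtus` only works on Windows, where netsh exists.

```python
import subprocess
from typing import Dict


def parse_subinterfaces(output: str) -> Dict[str, int]:
    """Map interface name -> MTU from `netsh interface ipv4 show subinterfaces` output."""
    mtus: Dict[str, int] = {}
    for line in output.splitlines():
        # Split into at most 5 fields so a name like "Ethernet 2" stays intact.
        fields = line.strip().split(maxsplit=4)
        # Data rows start with a numeric MTU; this skips the header and the dashed rule.
        if len(fields) == 5 and fields[0].isdigit():
            mtus[fields[4]] = int(fields[0])
    return mtus


def read_mtus() -> Dict[str, int]:
    """Run netsh and parse its output (Windows only)."""
    result = subprocess.run(
        ["netsh", "interface", "ipv4", "show", "subinterfaces"],
        capture_output=True, text=True, check=True,
    )
    return parse_subinterfaces(result.stdout)
```

For example, `read_mtus().get("Ethernet 2")` should return 1496 on the DC once the change above is in place.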

On Windows Server 2019, this took effect immediately; if it doesn’t for you, a reboot should make it apply. AD replication, which had been failing, picked right back up as soon as I entered this command.

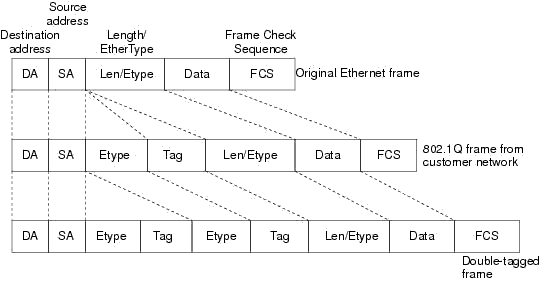

Now that I’ve proved it’s an MTU issue, I’ve turned it over to our L2 direct link provider. Interestingly enough, the missing 4 bytes (1500 - 1496 = 4) are exactly the size of the extra tag a QinQ implementation adds. The frame arrives as a tagged 802.1Q frame, and QinQ adds its own 4-byte tag (a TPID/EtherType field plus the tag control information) to it. The resulting QinQ frame is often referred to as ‘double tagged’.
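To see where the 4 bytes come from, here is a minimal Python sketch that builds Ethernet headers with one and two VLAN tags using `struct`. The MAC addresses and VLAN IDs are hypothetical, and the outer S-tag uses TPID 0x88A8 from IEEE 802.1ad, although some providers’ gear uses 0x8100 or 0x9100 for the outer tag instead.

```python
import struct
from typing import List

TPID_8021Q = 0x8100   # customer tag (C-tag), IEEE 802.1Q
TPID_8021AD = 0x88A8  # service tag (S-tag), IEEE 802.1ad; some gear uses 0x8100 or 0x9100
ETHERTYPE_IPV4 = 0x0800


def vlan_tag(tpid: int, vlan_id: int, pcp: int = 0) -> bytes:
    """4-byte VLAN tag: 2-byte TPID, then 2-byte TCI (3-bit PCP, DEI = 0, 12-bit VLAN ID)."""
    tci = (pcp << 13) | (vlan_id & 0x0FFF)
    return struct.pack("!HH", tpid, tci)


def ethernet_header(dst: bytes, src: bytes, tags: List[bytes], ethertype: int = ETHERTYPE_IPV4) -> bytes:
    """Destination MAC, source MAC, any VLAN tags (outermost first), then the EtherType."""
    return dst + src + b"".join(tags) + struct.pack("!H", ethertype)


if __name__ == "__main__":
    dst, src = bytes(6), bytes.fromhex("020000000001")  # hypothetical MAC addresses
    single = ethernet_header(dst, src, [vlan_tag(TPID_8021Q, 10)])
    double = ethernet_header(dst, src, [vlan_tag(TPID_8021AD, 200), vlan_tag(TPID_8021Q, 10)])
    print(len(single), len(double), len(double) - len(single))  # 18 22 4
```

Each tag is a 2-byte TPID plus a 2-byte TCI, so the provider’s outer tag makes every frame 4 bytes longer. If the equipment along the provider’s path doesn’t allow for that extra 4 bytes, a full 1500-byte IP packet no longer fits, while a 1496-byte one does.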

You can read more about QinQ in the Cisco article "Inter-Switch Link and IEEE 802.1Q Frame Format".